Description:-

An AI virtual mouse is software that reproduces the functions of a physical computer mouse without the device itself. A webcam captures the user's hand, and computer vision and machine learning models interpret hand gestures as mouse actions, such as moving the cursor and clicking.

The system relies on machine learning. Using hand gestures, the computer can be controlled virtually: it can perform left-click, right-click, scrolling, and cursor movement without a physical mouse. Hand detection is based on a deep learning model. Because the computer is controlled without touching a shared input device, the proposed system can help limit the spread of COVID-19 by eliminating human contact with, and dependency on, physical devices to control the computer.

Algorithm Used for Hand Tracking

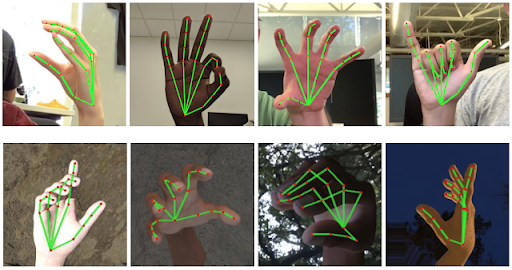

The MediaPipe framework is used for hand detection and hand tracking, and the OpenCV library is used for computer vision. The algorithm uses machine learning to track and recognize hand gestures and fingertips.

What is MediaPipe?

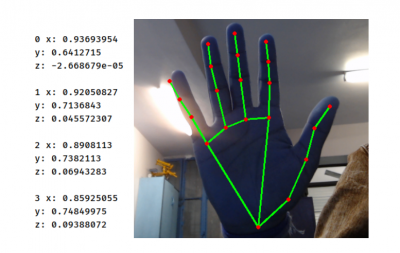

MediaPipe is an open-source machine learning framework from Google. It provides ready-made solutions for tasks such as face detection and gesture recognition, with bindings for Python, JavaScript, and other languages. MediaPipe Hands is a high-fidelity hand and finger tracking solution. It uses machine learning (ML) to infer 21 3D hand landmarks from a single frame, so we can use it to extract the coordinates of the key points of the hand.

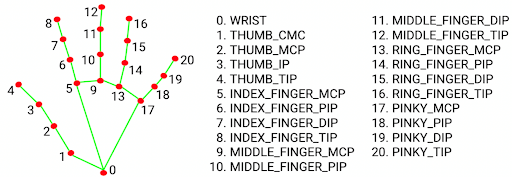

Hand Landmark Model Bundle

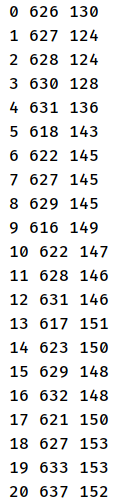

The hand landmark model bundle localizes 21 hand-knuckle keypoints within the detected hand regions. The model was trained on approximately 30K real-world images, as well as several rendered synthetic hand models imposed over various backgrounds. The definition of the 21 landmarks is given below.
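
The 21 landmark ids can also be kept as a small lookup table in Python. The names below follow MediaPipe's `HandLandmark` enumeration (available as `mp.solutions.hands.HandLandmark` in the legacy API); the list itself is a hand-written convenience, and the names `HAND_LANDMARKS` and `FINGERTIP_IDS` are hypothetical.

```python
# Index -> name lookup for the 21 MediaPipe hand landmarks.
# Names match mediapipe's HandLandmark enum, but this list is written by hand,
# not imported from MediaPipe.
HAND_LANDMARKS = [
    "WRIST",
    "THUMB_CMC", "THUMB_MCP", "THUMB_IP", "THUMB_TIP",
    "INDEX_FINGER_MCP", "INDEX_FINGER_PIP", "INDEX_FINGER_DIP", "INDEX_FINGER_TIP",
    "MIDDLE_FINGER_MCP", "MIDDLE_FINGER_PIP", "MIDDLE_FINGER_DIP", "MIDDLE_FINGER_TIP",
    "RING_FINGER_MCP", "RING_FINGER_PIP", "RING_FINGER_DIP", "RING_FINGER_TIP",
    "PINKY_MCP", "PINKY_PIP", "PINKY_DIP", "PINKY_TIP",
]

# Ids of the five fingertips (thumb, index, middle, ring, pinky).
FINGERTIP_IDS = [4, 8, 12, 16, 20]

if __name__ == "__main__":
    for idx, name in enumerate(HAND_LANDMARKS):
        print(idx, name)
```

The thumb tip (4) and index fingertip (8) are the two points used for volume control, and the index (8) and middle (12) fingertips drive the virtual mouse.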

The aim of this tutorial is to help you understand how to develop an interface that captures human hand gestures dynamically and uses them to control the volume level and the mouse cursor.

OpenCV:-

OpenCV is a computer vision library that contains image-processing algorithms, including algorithms for object detection. It is written in C++ and provides Python bindings, so real-time computer vision applications can be developed in Python. OpenCV is used for image and video processing and analysis, such as face detection and object detection.

Steps to do Hand Tracking

Step 1. Install the MediaPipe library, together with OpenCV, for example with `pip install mediapipe opencv-python`.

Step 2. Import the necessary libraries and initialize the MediaPipe Hands solution.

Step 3. Initialize the webcam capture function.

Step 4. Define the MediaPipe Hands function

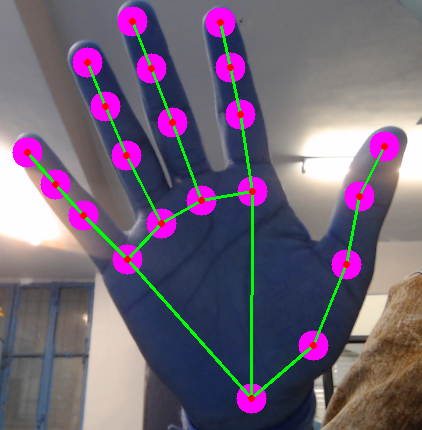

Create an instance of the Hands module provided by MediaPipe, followed by an instance of MediaPipe's drawing utility. The drawing utility draws the 21 landmarks and the lines connecting them on your image or frame.

Step 5. Real time hand detection

Now, read frames from the webcam inside a while loop. Also, convert each frame from BGR to RGB, because OpenCV stores images in BGR order while MediaPipe expects RGB input.
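
Steps 2 to 5 can be sketched as one short script. This is a minimal sketch, assuming the legacy `mp.solutions.hands` API (newer MediaPipe releases also offer a Tasks-based `HandLandmarker`), webcam index 0, and MediaPipe's default values (two hands, confidence 0.5); the helper name `should_quit` is hypothetical. The third-party imports sit inside `main()` so the script prints a message instead of crashing when a package or the camera is missing.

```python
def should_quit(key: int) -> bool:
    """True when the key code returned by cv2.waitKey() is 'q'."""
    # Keep only the low byte: some platforms set extra high bits in the key code.
    return key & 0xFF == ord("q")


def main() -> None:
    try:
        # Imported here so a missing package produces a message instead of a traceback.
        import cv2
        import mediapipe as mp
    except ImportError as exc:
        print(f"Missing dependency: {exc.name}")
        return

    mp_hands = mp.solutions.hands          # Steps 2 and 4: the Hands solution
    mp_draw = mp.solutions.drawing_utils   # Step 4: draws landmarks and connections
    cap = cv2.VideoCapture(0)              # Step 3: default webcam (index 0 assumed)
    if not cap.isOpened():
        print("Could not open the webcam")
        return

    with mp_hands.Hands(max_num_hands=2,
                        min_detection_confidence=0.5,
                        min_tracking_confidence=0.5) as hands:
        while True:                        # Step 5: real-time loop
            success, img = cap.read()
            if not success:
                break
            # OpenCV delivers frames in BGR order; MediaPipe expects RGB.
            results = hands.process(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
            if results.multi_hand_landmarks:
                for hand_lms in results.multi_hand_landmarks:
                    mp_draw.draw_landmarks(img, hand_lms, mp_hands.HAND_CONNECTIONS)
            cv2.imshow("Hand Tracking", img)
            if should_quit(cv2.waitKey(1)):
                break

    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    main()
```

Press q in the preview window to stop the loop. Using `Hands` as a context manager releases MediaPipe's resources when the loop ends.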

Step 6. Find the locations of the 21 landmarks on the hand.

Note: The id printed with each landmark is the landmark's index, from 0 to 20.
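
Step 6 can be sketched as a helper that lists every landmark of one hand together with its id. This is a minimal sketch: `landmark_table` is a hypothetical name, and the demo uses `SimpleNamespace` stand-ins instead of real MediaPipe results so that it runs without a camera. With a real frame, you would call it once for each element of `results.multi_hand_landmarks`.

```python
from types import SimpleNamespace


def landmark_table(hand_landmarks):
    """Return (id, x, y, z) for every landmark of one detected hand.

    `hand_landmarks` is one element of results.multi_hand_landmarks; its
    `.landmark` field holds 21 points with normalized x, y and a relative depth z.
    """
    return [(idx, lm.x, lm.y, lm.z) for idx, lm in enumerate(hand_landmarks.landmark)]


if __name__ == "__main__":
    # Real use inside the capture loop:
    #   for hand_lms in results.multi_hand_landmarks:
    #       for row in landmark_table(hand_lms):
    #           print(*row)
    # Stand-in hand so the demo runs without MediaPipe or a webcam.
    fake_hand = SimpleNamespace(
        landmark=[SimpleNamespace(x=i / 20, y=0.5, z=0.0) for i in range(21)]
    )
    for idx, x, y, z in landmark_table(fake_hand):
        print(idx, x, y, z)
```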

Step 7. Pixel Conversion

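In the output, the landmark coordinates are decimal values normalized to the range 0 to 1 relative to the image width and height. Convert them to pixels by multiplying x by the image width and y by the image height.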
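
The pixel conversion in Step 7 is a single multiplication per axis. A minimal sketch; the function name `to_pixels` is hypothetical:

```python
from typing import Tuple


def to_pixels(x_norm: float, y_norm: float, width: int, height: int) -> Tuple[int, int]:
    """Convert a normalized MediaPipe landmark position to integer pixel coordinates."""
    # x is normalized by the image width, y by the image height.
    return int(x_norm * width), int(y_norm * height)


if __name__ == "__main__":
    # In the capture loop: h, w, c = img.shape, then to_pixels(lm.x, lm.y, w, h).
    print(to_pixels(0.5, 0.25, 640, 480))  # prints (320, 120)
```

Note that `img.shape` returns the height first, so the width and height must be swapped when they are passed in. When a hand is partly outside the frame, landmarks may fall slightly outside the 0 to 1 range, so clamp the result if you use it to index the image.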

Output:-

Step 8. Now, start writing the class that detects the hand and its fingertips.

Note:

max_num_hands: The maximum number of hands that MediaPipe should detect. MediaPipe returns a list of hands, and each element of the list (one hand) contains its 21 landmark points.

min_detection_confidence, min_tracking_confidence: When MediaPipe starts, it first detects the hands. After that, it tracks them, because detection is more time-consuming than tracking. A detection is accepted only if its confidence is at least min_detection_confidence, and if the tracking confidence falls below min_tracking_confidence, MediaPipe switches back to detection.

All these parameters are accepted by the Hands() class, so we pass them to it when creating the Hands instance.

Step 9. Define the findHands function to find the hand landmarks in the image.

Function arguments: If the image contains multiple hands, the function returns landmarks only for the specified hand number. The Boolean parameter draw decides whether MediaPipe draws the landmarks on the image. The call to the process() method of the Hands object does most of the work: this single line runs detection and tracking behind the scenes and returns all the landmarks.

Step 10. Now, write a function that finds the pixel locations of the hand landmarks.

Step 11. Define the main() function that calls the class methods.
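
Steps 8 to 11 can be put together as one module. This is a sketch under stated assumptions, not the tutorial's original code: the class name `HandDetector`, the method name `findPosition`, and the optional `hands` argument (which lets you pass a stand-in object, for example in tests) are hypothetical; `findHands` follows the name used in Step 9. The constructor arguments are passed straight to MediaPipe's legacy `Hands()` constructor.

```python
class HandDetector:
    """Small wrapper around MediaPipe Hands (legacy mp.solutions API)."""

    def __init__(self, mode=False, max_hands=2, detection_con=0.5, track_con=0.5, hands=None):
        self.results = None
        self.mp_hands = self.mp_draw = None
        if hands is None:
            import mediapipe as mp
            self.mp_hands = mp.solutions.hands
            self.mp_draw = mp.solutions.drawing_utils
            hands = self.mp_hands.Hands(
                static_image_mode=mode,
                max_num_hands=max_hands,
                min_detection_confidence=detection_con,
                min_tracking_confidence=track_con,
            )
        self.hands = hands  # any object with a process(rgb_image) method

    def findHands(self, img, draw=True):
        """Run detection/tracking on a BGR frame and optionally draw the landmarks on it."""
        import cv2
        self.results = self.hands.process(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
        if draw and self.mp_draw and self.results.multi_hand_landmarks:
            for hand_lms in self.results.multi_hand_landmarks:
                self.mp_draw.draw_landmarks(img, hand_lms, self.mp_hands.HAND_CONNECTIONS)
        return img

    def findPosition(self, img, hand_no=0):
        """Return [[id, x_px, y_px], ...] for hand number hand_no, or [] if it is absent."""
        hands_found = getattr(self.results, "multi_hand_landmarks", None) or []
        if hand_no >= len(hands_found):
            return []
        h, w = img.shape[:2]
        return [[idx, int(lm.x * w), int(lm.y * h)]
                for idx, lm in enumerate(hands_found[hand_no].landmark)]


def main():
    try:
        import cv2
        detector = HandDetector()
    except ImportError as exc:
        print(f"Missing dependency: {exc.name}")
        return
    cap = cv2.VideoCapture(0)
    if not cap.isOpened():
        print("Could not open the webcam")
        return
    while True:
        success, img = cap.read()
        if not success:
            break
        img = detector.findHands(img)
        lm_list = detector.findPosition(img)
        if lm_list:
            print(lm_list[4])  # thumb tip as [id, x, y]
        cv2.imshow("Image", img)
        if cv2.waitKey(1) & 0xFF == ord("q"):
            break
    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    main()
```

Keeping detection (findHands) separate from coordinate extraction (findPosition) lets other scripts reuse the class and only ask for the landmark positions they need.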

Note: The hand landmark detection part is now complete. Next, let's see how you can control the computer volume with the index fingertip and the thumb tip.

Volume Controller:-

Before writing any custom code, we need to install an external Python package, pycaw (`pip install pycaw`). This library handles controlling the system volume. Note that pycaw uses the Windows Core Audio API, so it works only on Windows.
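
The volume gesture reduces to one calculation: measure the pixel distance between the thumb tip (id 4) and the index fingertip (id 8), then map it linearly onto 0 to 100 %. A minimal sketch; the calibration range of 30 to 200 pixels and the function name `volume_percent` are assumptions to adjust for your camera and hand size.

```python
import math


def volume_percent(thumb_tip, index_tip, min_dist=30.0, max_dist=200.0):
    """Map the thumb-to-index fingertip distance (pixels) linearly onto 0-100 %.

    thumb_tip and index_tip are (x, y) pixel positions of landmarks 4 and 8.
    min_dist/max_dist are assumed calibration values; tune them for your setup.
    """
    dist = math.hypot(index_tip[0] - thumb_tip[0], index_tip[1] - thumb_tip[1])
    dist = min(max(dist, min_dist), max_dist)  # clamp to the calibrated range
    return (dist - min_dist) * 100.0 / (max_dist - min_dist)


if __name__ == "__main__":
    print(volume_percent((100, 100), (100, 215)))  # prints 50.0
```

On Windows, the fraction `percent / 100` should then be usable with pycaw's `IAudioEndpointVolume.SetMasterVolumeLevelScalar(level, None)`. How the `IAudioEndpointVolume` object is obtained has changed between pycaw releases, so follow the pycaw README for your installed version.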

Steps to Create AI Virtual Mouse

Step 1. Make a new Python file and import the necessary libraries.

Step 2. Initialize the webcam capture.

Step 3. Call the hand_tracking class

Now, create the hand detector from the hand_tracking class with a detection confidence of 0.7 and a maximum of one hand.

Step 4. Read the webcam frame and pass the image to the detector's find_hands function to detect the hand landmarks.

Step 6. Get the tips of the index and middle fingers.
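
The mouse steps need to know where the index and middle fingertips are and whether each finger is raised. A minimal sketch, assuming `lm_list` is a list of `[id, x, y]` pixel entries for one hand, the format returned by a find-position function like the one in Step 10 of the hand tracking part. The function names are hypothetical, and the "tip above the PIP joint" test is a common heuristic for an upright hand, not something MediaPipe provides.

```python
INDEX_TIP, INDEX_PIP = 8, 6      # MediaPipe landmark ids
MIDDLE_TIP, MIDDLE_PIP = 12, 10


def finger_tips(lm_list):
    """Return ((x1, y1), (x2, y2)): pixel positions of the index and middle fingertips."""
    _, x1, y1 = lm_list[INDEX_TIP]
    _, x2, y2 = lm_list[MIDDLE_TIP]
    return (x1, y1), (x2, y2)


def is_finger_up(lm_list, tip_id, pip_id):
    """Heuristic: image y grows downwards, so a raised finger's tip has a smaller y than its PIP joint."""
    return lm_list[tip_id][2] < lm_list[pip_id][2]


def index_and_middle_up(lm_list):
    """Return (index_up, middle_up) for one hand."""
    return (is_finger_up(lm_list, INDEX_TIP, INDEX_PIP),
            is_finger_up(lm_list, MIDDLE_TIP, MIDDLE_PIP))
```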

Step 7. Map the index fingertip position to screen coordinates to move the mouse cursor.
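
Step 7 maps the fingertip from camera coordinates to screen coordinates. A common approach, sketched here under assumptions: only an inner rectangle of the frame (a 100-pixel margin, an assumed value) is mapped onto the full screen, so the cursor can reach the screen edges without the hand leaving the frame, and a simple smoothing step (factor 5, also assumed) reduces jitter. The function names are hypothetical.

```python
def interp(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamping outside the input range."""
    value = min(max(value, in_lo), in_hi)
    return out_lo + (value - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


def map_to_screen(x, y, cam_w, cam_h, screen_w, screen_h, margin=100, mirror=False):
    """Map an index-fingertip pixel in the camera frame to a screen position.

    Only the inner rectangle of the frame (margin px from every edge) is used.
    Set mirror=True if the camera frame has not been flipped horizontally.
    """
    sx = interp(x, margin, cam_w - margin, 0, screen_w - 1)
    sy = interp(y, margin, cam_h - margin, 0, screen_h - 1)
    if mirror:
        sx = (screen_w - 1) - sx
    return sx, sy


def smooth(prev, target, factor=5):
    """Move 1/factor of the way from prev to target to reduce cursor jitter."""
    return prev + (target - prev) / factor
```

The resulting position can be passed to a cursor-control library, for example `pyautogui.moveTo(int(sx), int(sy))` if you use PyAutoGUI. The tutorial does not name the library it uses, so that choice is an assumption.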

Step 8. Set the clicking mode if both the index finger and middle finger are up.
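
Clicking mode can be sketched as follows: when both fingers are up, a click fires once the two fingertips come closer than a threshold. Without an edge check, a held pinch would click on every frame, so the sketch reports a click only on the frame where the pinch starts. The 40-pixel threshold and the names `should_click` and `PinchClicker` are assumptions.

```python
import math


def should_click(index_tip, middle_tip, both_up, threshold=40.0):
    """Clicking mode: both fingers raised and their tips closer than `threshold` pixels."""
    if not both_up:
        return False
    distance = math.hypot(middle_tip[0] - index_tip[0], middle_tip[1] - index_tip[1])
    return distance < threshold


class PinchClicker:
    """Returns True only on the frame where a pinch starts, so one pinch gives one click."""

    def __init__(self):
        self.was_pinched = False

    def update(self, pinched):
        fire = pinched and not self.was_pinched
        self.was_pinched = pinched
        return fire
```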

Step 9. Now, display the image to see the output.
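
The mouse steps can be combined into one loop. This is a minimal end-to-end sketch, not the tutorial's exact program: it uses MediaPipe Hands directly (one hand, detection confidence 0.7, as in Step 3) instead of the hand_tracking class, and PyAutoGUI for cursor movement and clicks. PyAutoGUI, the margin, smoothing, and click-distance values, and the function name `run_virtual_mouse` are all assumptions. One raised index finger moves the cursor; raising the index and middle fingers and pinching them together gives one left click per pinch.

```python
import math


def interp(value, in_lo, in_hi, out_lo, out_hi):
    """Linear mapping with clamping, like numpy.interp over a two-point range."""
    value = min(max(value, in_lo), in_hi)
    return out_lo + (value - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


def run_virtual_mouse(margin=100, smoothing=5, click_dist=40):
    try:
        import cv2
        import mediapipe as mp
    except ImportError as exc:
        print(f"Missing dependency: {exc.name}")
        return
    cap = cv2.VideoCapture(0)                    # Step 2: webcam (index 0 assumed)
    if not cap.isOpened():
        print("Could not open the webcam")
        return
    import pyautogui  # assumed cursor library; needs a desktop session

    screen_w, screen_h = pyautogui.size()
    mp_hands = mp.solutions.hands
    hands = mp_hands.Hands(max_num_hands=1, min_detection_confidence=0.7)  # Step 3
    draw = mp.solutions.drawing_utils
    cur_x, cur_y = 0.0, 0.0
    was_pinched = False

    while True:
        ok, img = cap.read()                     # Step 4: read a frame
        if not ok:
            break
        img = cv2.flip(img, 1)                   # mirror view: hand right -> cursor right
        h, w = img.shape[:2]
        results = hands.process(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

        if results.multi_hand_landmarks:
            hand = results.multi_hand_landmarks[0]
            draw.draw_landmarks(img, hand, mp_hands.HAND_CONNECTIONS)
            pts = [(int(lm.x * w), int(lm.y * h)) for lm in hand.landmark]
            (x1, y1), (x2, y2) = pts[8], pts[12]  # Step 6: index and middle tips
            index_up, middle_up = y1 < pts[6][1], y2 < pts[10][1]

            if index_up and not middle_up:       # Step 7: moving mode, smoothed
                cur_x += (interp(x1, margin, w - margin, 0, screen_w - 1) - cur_x) / smoothing
                cur_y += (interp(y1, margin, h - margin, 0, screen_h - 1) - cur_y) / smoothing
                pyautogui.moveTo(int(cur_x), int(cur_y))

            # Step 8: clicking mode, one click per pinch
            pinched = index_up and middle_up and math.hypot(x2 - x1, y2 - y1) < click_dist
            if pinched and not was_pinched:
                pyautogui.click()
            was_pinched = pinched

        cv2.imshow("AI Virtual Mouse", img)      # Step 9: show the output
        if cv2.waitKey(1) & 0xFF == ord("q"):
            break

    hands.close()
    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    run_virtual_mouse()
```

Because the mapping can place the cursor at the top-left corner, PyAutoGUI's fail-safe can raise an exception there; it is left enabled here as an emergency stop.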

Output:-

Conclusion:-

Creating an AI virtual mouse is exciting and has many potential applications. A virtual mouse driven by hand gestures lets you move the cursor and click without touching a physical device. Hope you understood this tutorial on creating an AI virtual mouse using hand gesture recognition. Have any questions related to the tutorial? Feel free to ask by leaving your comments below.


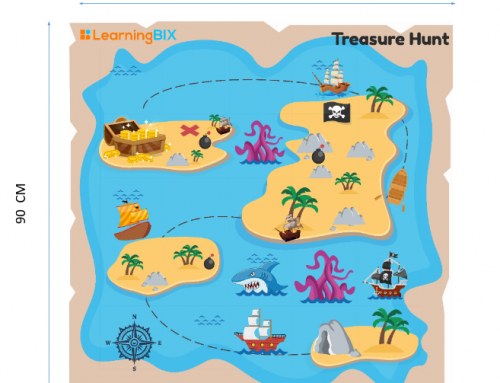

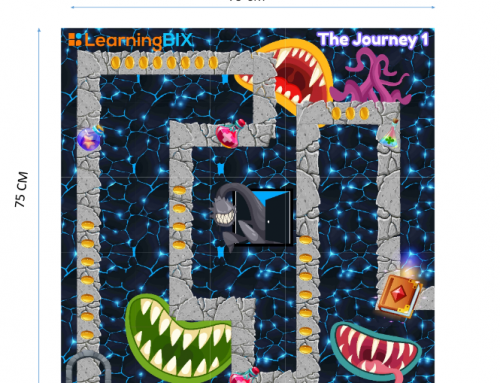

Visit Learning Bix for more updates
