Description:-

Gesture recognition is a technology that enables machines to interpret and understand human gestures through machine vision, image processing, and computer vision techniques. This technology can recognize hand gestures, facial expressions, and other movements. It can be used in virtual reality applications, gaming, and robotics.

Gesture recognition can improve the user experience in various applications, allowing for a more natural way of interacting with a computer system. It can also be used in security systems, allowing for more accurate and secure identification of people.

NumPy is a Python library that adds support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions that operate on these arrays.

What is MediaPipe?

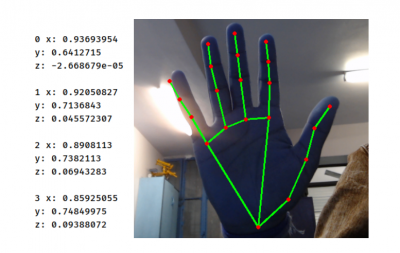

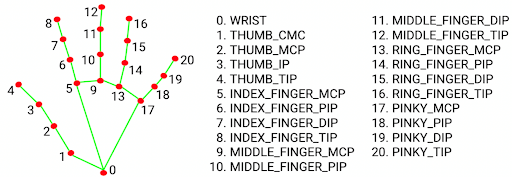

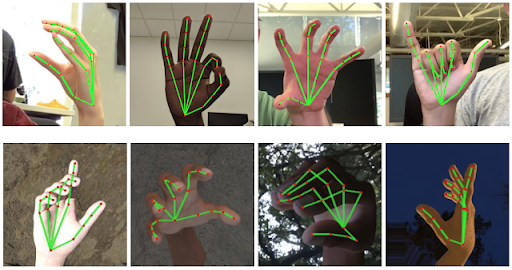

MediaPipe is an open-source machine learning library from Google. It offers ready-made solutions for tasks such as face recognition and gesture recognition, and it provides bindings for Python, JavaScript, and other languages. MediaPipe Hands is a high-fidelity hand and finger tracking solution. It uses machine learning (ML) to infer 21 3D hand landmarks from just one frame. We can use it to extract the coordinates of the key points of the hand.

Hand Landmark Model Bundle

The hand landmark model bundle locates 21 hand-knuckle key points within the detected hand regions. The model was trained on approximately 30K real-world images and several rendered synthetic hand models imposed over various backgrounds. The definition of the 21 landmarks is given below.

The aim of this tutorial is to help you understand how to develop an interface that will capture human hand gestures dynamically and control the volume level.

Working Principle

We use the camera of our device for this project. The camera feed is used to detect the hand and its landmark points, so that we can measure the distance between the thumb tip (point 4) and the index fingertip (point 8). This distance is mapped linearly to the volume of the device: the farther apart the two fingertips are, the higher the volume.
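As a minimal sketch of this idea, the snippet below computes the straight-line distance between landmarks 4 and 8. It assumes the landmarks have already been converted to pixel coordinates and stored as `[id, x, y]` entries. The function name `fingertip_distance` and that list layout are illustrative choices, not part of MediaPipe.

```python
import math

THUMB_TIP = 4          # MediaPipe Hands landmark index for the thumb tip
INDEX_FINGER_TIP = 8   # MediaPipe Hands landmark index for the index fingertip


def fingertip_distance(landmarks, a=THUMB_TIP, b=INDEX_FINGER_TIP):
    """Return the Euclidean distance in pixels between two landmarks.

    `landmarks` is assumed to be a list of [id, x, y] entries ordered by id.
    """
    _, x1, y1 = landmarks[a]
    _, x2, y2 = landmarks[b]
    return math.hypot(x2 - x1, y2 - y1)


# Hypothetical hand with 21 landmarks; only points 4 and 8 matter here.
example = [[i, 0, 0] for i in range(21)]
example[THUMB_TIP] = [4, 100, 200]
example[INDEX_FINGER_TIP] = [8, 400, 600]
print(fingertip_distance(example))  # 500.0
```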

Methodology/Approach

- Detect hand landmarks

- Calculate the distance between thumb tip and index fingertip.

- Map the distance between the thumb tip and index fingertip to the volume range. In this case, the distance ranged from 30 to 350 pixels and the volume range was -63.5 to 0.0 (the decibel levels reported by the audio device).

- To exit, press the Spacebar.
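The mapping step in the list above can be sketched as a clamped linear interpolation. This is only an illustration using the ranges quoted in this tutorial (30 to 350 for the distance, -63.5 to 0.0 for the volume). The helper names `map_range` and `distance_to_volume` are hypothetical, and the same result could be obtained with `numpy.interp`.

```python
def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly map value from [in_min, in_max] to [out_min, out_max].

    Values outside the input range are clamped to its ends.
    """
    value = max(in_min, min(in_max, value))
    fraction = (value - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


def distance_to_volume(distance):
    """Map a fingertip distance to a volume level using the tutorial's ranges."""
    return map_range(distance, 30, 350, -63.5, 0.0)


print(distance_to_volume(30))   # -63.5
print(distance_to_volume(190))  # -31.75
print(distance_to_volume(500))  # 0.0
```

Clamping matters here because the measured distance can easily fall outside the expected range when the hand moves closer to or farther from the camera.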

Guide to Control Volume Using Hand Gesture

Step 1. Install the MediaPipe library.

Step 2. Import the necessary libraries. Also, initialize the MediaPipe holistic function.

Step 3. Initialize the webcam capture function.

Step 4. Define the MediaPipe holistic function.

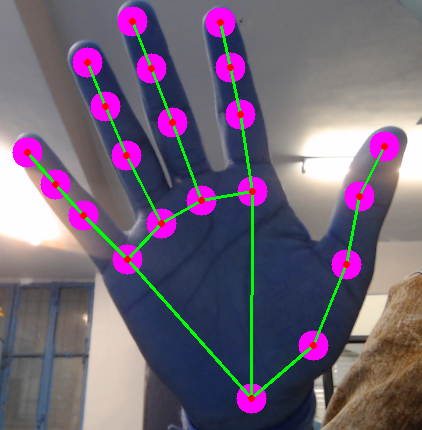

Create an instance of the hands module provided by MediaPipe, followed by an instance of MediaPipe’s drawing utility. The drawing utility helps to draw the 21 landmarks and the lines connecting them on your image or frame.

Step 5. Real-time hand detection

Now, read frames from the webcam inside a while loop. Also, convert each frame from BGR to RGB, because OpenCV delivers frames in BGR order while MediaPipe expects RGB.
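A minimal sketch of such a capture loop is shown below, assuming the `opencv-python` package is installed and that the webcam is device 0. The function names `rgb_frames` and `preview` are illustrative, and OpenCV is imported inside the functions only so that the file can be loaded on a machine without a camera.

```python
def rgb_frames(camera_index=0):
    """Yield (bgr_frame, rgb_frame) pairs from the webcam until a read fails."""
    import cv2  # imported lazily; requires the opencv-python package

    capture = cv2.VideoCapture(camera_index)
    try:
        while True:
            success, frame_bgr = capture.read()
            if not success:
                break
            # OpenCV returns BGR; MediaPipe expects RGB.
            yield frame_bgr, cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB)
    finally:
        capture.release()


def preview():
    """Show the webcam feed until the Spacebar is pressed."""
    import cv2

    for frame_bgr, _frame_rgb in rgb_frames():
        cv2.imshow("Webcam", frame_bgr)
        if cv2.waitKey(1) & 0xFF == ord(" "):
            break
    cv2.destroyAllWindows()
```

Calling `preview()` opens a window with the live feed. The RGB copy is the one that would be passed to MediaPipe, while the BGR frame is the one to display.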

Step 6. Find the location of 21 landmarks on the hand.

Note: This is the new id of the hand connection.

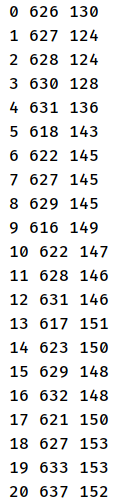

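Step 7. In the output, you can see decimal values. These are landmark coordinates normalized to the range 0 to 1 relative to the image width and height. Convert them into pixel coordinates by multiplying by the image width and height.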
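The conversion is a single multiplication per axis. The sketch below assumes normalized `x` and `y` values in the range 0 to 1 and a known frame size. The helper name `to_pixels` is hypothetical.

```python
def to_pixels(x_norm, y_norm, width, height):
    """Convert normalized landmark coordinates to integer pixel coordinates."""
    return int(x_norm * width), int(y_norm * height)


# A landmark in the horizontal centre, a quarter of the way down a 640x480 frame.
print(to_pixels(0.5, 0.25, 640, 480))  # (320, 120)
```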


Step 8. Now, start writing the class that detects the hand tips.

Note:

max_num_hands: The maximum number of hands that you want MediaPipe to detect. MediaPipe returns an array of hands, and each element of the array (a hand) in turn has its 21 landmark points.

min_detection_confidence, min_tracking_confidence: When MediaPipe first starts, it detects the hands. After that, it tries to track them, because detection is more time-consuming than tracking. If the tracking confidence drops below the specified value, it switches back to detection.

All these parameters are needed by the Hands() class, so we pass them to the Hands class in the next line.
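A constructor along these lines could look like the sketch below. It assumes the `mediapipe` package is installed and that the installed version still ships the legacy `mp.solutions` API. The class name `HandDetector` and its attribute names are illustrative, and the defaults shown are the ones MediaPipe documents for `Hands`.

```python
class HandDetector:
    """Thin wrapper that stores the options and builds MediaPipe's Hands object."""

    def __init__(self, static_image_mode=False, max_num_hands=2,
                 min_detection_confidence=0.5, min_tracking_confidence=0.5):
        import mediapipe as mp  # imported lazily; requires the mediapipe package

        self.mp_hands = mp.solutions.hands            # the hands module
        self.mp_draw = mp.solutions.drawing_utils     # the drawing utility
        self.hands = self.mp_hands.Hands(
            static_image_mode=static_image_mode,
            max_num_hands=max_num_hands,
            min_detection_confidence=min_detection_confidence,
            min_tracking_confidence=min_tracking_confidence,
        )
```

Passing the options by keyword keeps the call valid even though the positional order of the `Hands` parameters has changed between MediaPipe releases.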

Step 9. Define the findHands function to find the hand landmarks in the image.

Function arguments: If the image contains multiple hands, our function returns landmarks for only the specified hand number. A Boolean parameter, draw, decides whether MediaPipe draws those landmarks on our image. The line that processes the image does everything: this small call does a lot behind the scenes and gets all the landmarks for you.
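One way to sketch this function is shown below. It is written as a plain function that receives its MediaPipe objects as arguments, which is an assumption made for illustration rather than the structure of the original class. `hands` is expected to be a `Hands` instance, `drawer` the `mediapipe.solutions.drawing_utils` module, and `connections` the `HAND_CONNECTIONS` constant from `mediapipe.solutions.hands`.

```python
def find_hands(frame_bgr, frame_rgb, hands, drawer, connections, draw=True):
    """Run hand detection on the RGB frame and optionally draw on the BGR frame.

    Returns the results object produced by hands.process().
    """
    results = hands.process(frame_rgb)  # this single call finds all landmarks
    if draw and results.multi_hand_landmarks:
        for hand_landmarks in results.multi_hand_landmarks:
            # Draw the 21 points and the lines connecting them.
            drawer.draw_landmarks(frame_bgr, hand_landmarks, connections)
    return results
```

When no hand is visible, `multi_hand_landmarks` is `None`, so the drawing loop is skipped.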

Step 10. Now, write a function to find the location of the hand landmarks.
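A possible shape for this function is sketched below. It assumes a results object like the one returned by MediaPipe’s `Hands.process()`, where `multi_hand_landmarks` is either `None` or a list of hands whose `landmark` entries carry normalized `x` and `y` values. The name `find_position` and the `[id, x, y]` output layout are illustrative, and the example uses stand-in objects so it runs without a camera.

```python
from types import SimpleNamespace


def find_position(results, image_width, image_height, hand_no=0):
    """Return [[id, x_px, y_px], ...] for one detected hand, or [] if none."""
    hands = getattr(results, "multi_hand_landmarks", None)
    if not hands or hand_no >= len(hands):
        return []
    return [
        [idx, int(lm.x * image_width), int(lm.y * image_height)]
        for idx, lm in enumerate(hands[hand_no].landmark)
    ]


# Stand-in for a real results object, with one hand and two fake landmarks.
fake_hand = SimpleNamespace(landmark=[SimpleNamespace(x=0.5, y=0.5),
                                      SimpleNamespace(x=0.25, y=0.75)])
fake_results = SimpleNamespace(multi_hand_landmarks=[fake_hand])
print(find_position(fake_results, 640, 480))  # [[0, 320, 240], [1, 160, 360]]
```

Returning an empty list when no hand is found lets the caller skip the volume update with a simple truthiness check.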

Step 11. Define the main() function to call the class methods.

Note: Our hand landmark detection part is now complete. Next, see how you can control the computer volume using the index fingertip and thumb tip.

Volume Controller: Before we write any of our custom code, we need to install an external Python package, pycaw. This library handles control of the system volume on Windows.

Steps to Control the Volume with Hand Gestures

Step 1. Create a new Python file and import the necessary libraries.

Step 2. Initialize the webcam capture.

Step 3. Call the hand_tracking class

Now, create the hand detector from the hand_tracking class with a detection confidence of 0.7 and a maximum of one hand.

Step 4. Control audio utilities

Now, define a function that controls the audio utilities of the computer, so that the computer volume can be linked to the distance between the index fingertip and the thumb tip.

Step 5. Define some variables related to the volume bar.

Step 6. Read the webcam and filter the hand by bounding-box area.

Step 7. Find the distance between the thumb and index finger using the findDistance function.
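The bounding-box filter can be sketched as follows. The idea, as far as it can be inferred from this step, is to react only when the hand occupies a reasonable portion of the frame, which roughly corresponds to a usable distance from the camera. The helper names and the area thresholds in the example are assumptions for illustration, not values from the original code.

```python
def bounding_box(landmarks):
    """Return (x_min, y_min, x_max, y_max) for a list of [id, x, y] landmarks."""
    xs = [x for _, x, _ in landmarks]
    ys = [y for _, _, y in landmarks]
    return min(xs), min(ys), max(xs), max(ys)


def bbox_area(bbox):
    """Return the area in square pixels of an (x_min, y_min, x_max, y_max) box."""
    x_min, y_min, x_max, y_max = bbox
    return (x_max - x_min) * (y_max - y_min)


def hand_in_range(landmarks, min_area, max_area):
    """True when the hand's bounding-box area lies strictly between the limits."""
    return min_area < bbox_area(bounding_box(landmarks)) < max_area


points = [[0, 100, 100], [4, 150, 300], [8, 300, 120]]
box = bounding_box(points)
print(box)                                      # (100, 100, 300, 300)
print(bbox_area(box))                           # 40000
print(hand_in_range(points, 25_000, 100_000))   # True
```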

Step 8. Now, set the volume.
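Setting the volume could be sketched as below. The function accepts any object that exposes `GetVolumeRange()` and `SetMasterVolumeLevel(level, event_context)`, which are methods of the `IAudioEndpointVolume` interface that pycaw wraps on Windows. The function name, the default distance limits, and the `FakeEndpoint` stand-in (including its step value of 0.5) are assumptions so that the example runs on any operating system.

```python
def set_volume_from_distance(endpoint, distance, min_dist=30, max_dist=350):
    """Map a fingertip distance onto the device's volume range and apply it.

    Returns the level (in dB) that was passed to the endpoint.
    """
    min_db, max_db, _step = endpoint.GetVolumeRange()
    clamped = max(min_dist, min(max_dist, distance))
    fraction = (clamped - min_dist) / (max_dist - min_dist)
    level_db = min_db + fraction * (max_db - min_db)
    endpoint.SetMasterVolumeLevel(level_db, None)
    return level_db


class FakeEndpoint:
    """Stand-in for the real audio endpoint; records the last level set."""

    def __init__(self):
        self.level = None

    def GetVolumeRange(self):
        return (-63.5, 0.0, 0.5)

    def SetMasterVolumeLevel(self, level_db, event_context):
        self.level = level_db


endpoint = FakeEndpoint()
print(set_volume_from_distance(endpoint, 190))  # -31.75
```

Reading the range from the device instead of hard-coding it keeps the mapping correct on hardware whose minimum level differs from -63.5.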

Step 9. Draw the rectangle of the volume bar and overlay it on the webcam frame.


Conclusion:-

To wrap up, this tutorial showed you how to create a hand gesture volume controller. The next time you are listening to your favourite music while working, you can control the volume with just a gesture of your hand.
